In recent years, artificial intelligence (AI) has come to the forefront of technological innovation, and among its most impressive feats is natural language processing (NLP). The Generative Pre-trained Transformer, or GPT, series by OpenAI stands as a testament to how far NLP has come. Let’s embark on a journey from GPT-1 to the current marvel, ChatGPT, and witness the evolution of a technology that’s transforming our digital conversations.

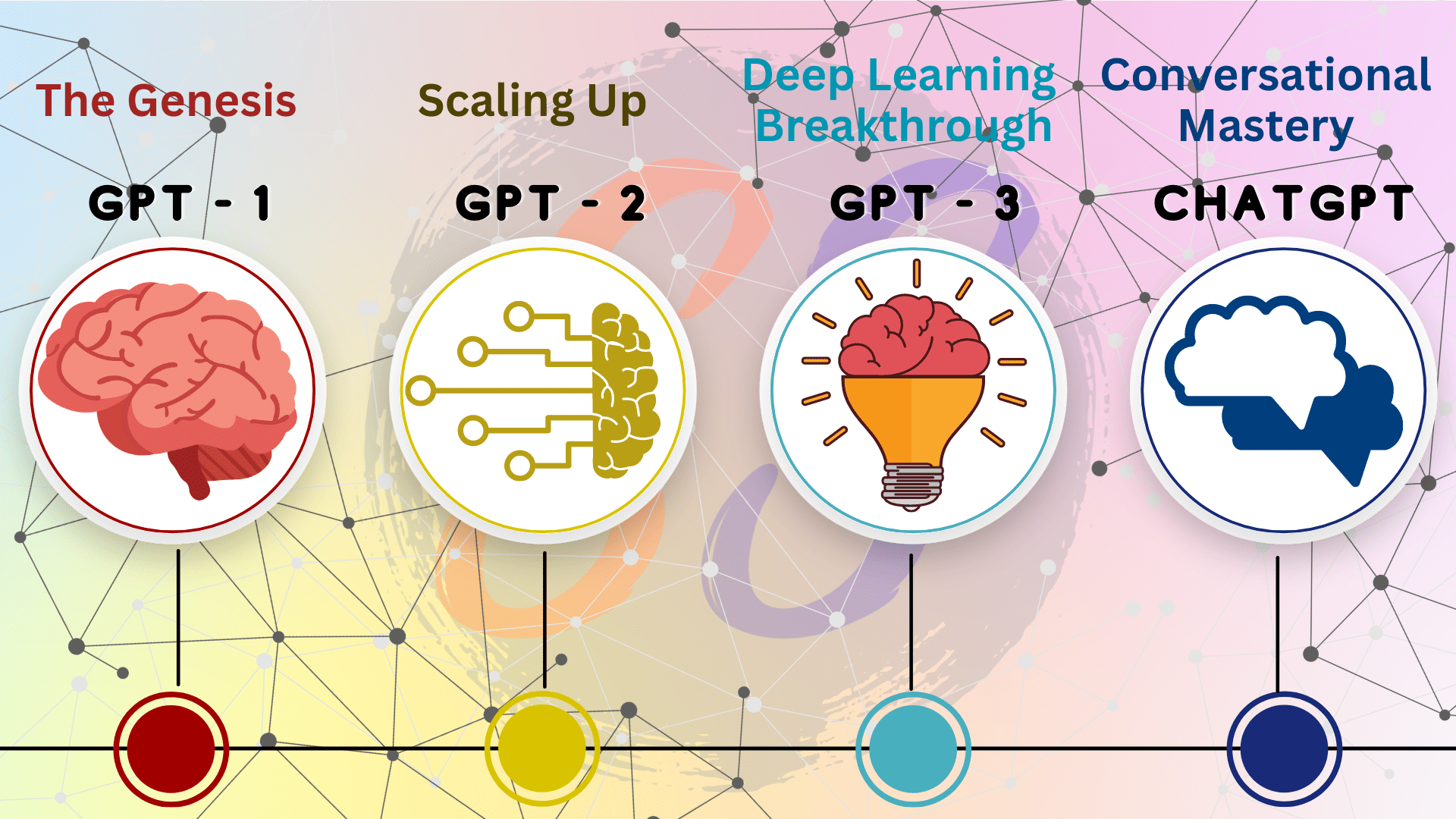

GPT-1: The Beginning

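Launched in June 2018, GPT-1 was the first in a series that aimed to revolutionize machine understanding of human language. With 117 million parameters, it was pre-trained on the BooksCorpus dataset, a collection of over 7,000 unpublished books, and then fine-tuned on individual tasks. GPT-1 showed that a transformer, a deep learning architecture introduced only a year earlier, could learn general language patterns from unlabeled text. It could then be adapted to a range of NLP tasks with minimal task-specific changes to its architecture.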

GPT-2: The Breakthrough

The world took notice when OpenAI introduced GPT-2 in February 2019. With 1.5 billion parameters, more than ten times as many as its predecessor, it was a giant leap forward. OpenAI initially withheld the full model, citing concerns about potential misuse, and released it in stages, completing the release in November 2019. Without any task-specific fine-tuning, GPT-2 showed capabilities in tasks like translation, question answering, and rudimentary reading comprehension. Its ability to generate coherent, diverse, and contextually relevant paragraphs of text was particularly striking.

GPT-3: The Game Changer

2020 saw the release of GPT-3, which dwarfed its predecessor with 175 billion parameters. Its vast scale and training on a broad mix of internet text and books gave it a notable new ability: performing a task from just a few examples placed in the prompt (few-shot, or in-context, learning) rather than through fine-tuning. GPT-3 could draft essays, answer questions, write poetry, and even generate simple Python code. Its adaptability, made available through OpenAI's API, led to a myriad of applications, from content creation to customer service bots.

ChatGPT: Fine-Tuning Excellence

Released in November 2022, ChatGPT was fine-tuned from a model in the GPT-3.5 series, itself a descendant of GPT-3, with a specific focus on conversation. OpenAI trained it with reinforcement learning from human feedback (RLHF): human trainers wrote example dialogues and ranked alternative model responses, and those rankings were used to steer the model toward more helpful answers. The result showed a more nuanced understanding of user prompts, and it arrived amid growing demand for chatbots and conversational agents across industries.

The Core Technology: Transformers

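Central to the success of the GPT series is the transformer architecture, introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al. Its self-attention mechanism lets each token in a sequence weigh how relevant every other token is to it, which makes the model adept at capturing context in language. GPT models use only the decoder side of the original design, with masked self-attention so that each token attends only to the tokens before it. Transformers have since become the backbone of many NLP breakthroughs.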
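To make self-attention a little more concrete, here is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, written in plain Python so it runs without dependencies. It is a simplification under stated assumptions: the query, key, and value matrices are passed in directly (real models compute them with learned projections and run many attention heads in parallel), and a causal mask is applied so each token attends only to itself and earlier tokens, as GPT-style decoders do. The helper names (`matmul`, `softmax`, `causal_self_attention`) are illustrative, not taken from any library.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    inner = len(b)
    return [
        [sum(a[i][t] * b[t][j] for t in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(row)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - peak) for x in row]  # exp(-inf) == 0.0 for masked slots
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Compute softmax(Q K^T / sqrt(d_k)) V with a causal mask.

    Assumes self-attention (q and k have the same number of rows).
    Returns the attended outputs and the attention weights.
    """
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # scores[i][j]: how well query i matches key j
    n = len(scores)
    weights: Matrix = []
    for i in range(n):
        # Position i may only look at positions 0..i; later ones get -inf,
        # which softmax turns into a weight of exactly 0.
        row = [
            scores[i][j] / math.sqrt(d_k) if j <= i else float("-inf")
            for j in range(n)
        ]
        weights.append(softmax(row))
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v), weights


if __name__ == "__main__":
    # Three toy 2-dimensional "token" vectors, reused as Q, K, and V
    # (a simplification: real models use learned projections).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, w = causal_self_attention(x, x, x)
    for row in w:
        print([round(p, 3) for p in row])
```

Row i of the printed weights shows how much token i draws on each token up to and including itself; the zeros above the diagonal are the causal mask at work. A real implementation would use a tensor library and batch many heads at once; plain lists are used here only to keep the sketch dependency-free.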

Challenges and Ethical Concerns

As with all groundbreaking technologies, the GPT series brought its share of challenges. The risk of misuse, from generating fake news to abusive content, remained high. OpenAI responded with measures such as staged model releases, output moderation, and refinements to system behavior. User feedback has also helped OpenAI identify failures and make the technology safer.

The Road Ahead

From GPT-1’s modest beginnings to ChatGPT’s conversational prowess, the journey has been transformative. Rapid progress in under five years hints at an even more promising future. As OpenAI continues to refine and expand upon the GPT series, one can only imagine the NLP milestones yet to come.

Conclusion

The GPT series by OpenAI is more than just a technological marvel; it’s a testament to human ingenuity and the endless possibilities of AI. As we stand on the cusp of even greater NLP advancements, reflecting on this journey from GPT-1 to ChatGPT offers both inspiration and anticipation for the future.